On Tuesday 30th, I had the opportunity to return to the same room at UNESCO in Paris where the International Organisations Workshop organised by EDPS and UNESCO had taken place the week before. The topic was once again AI regulation, a sign of how crucial this moment is in the international debate on AI governance.

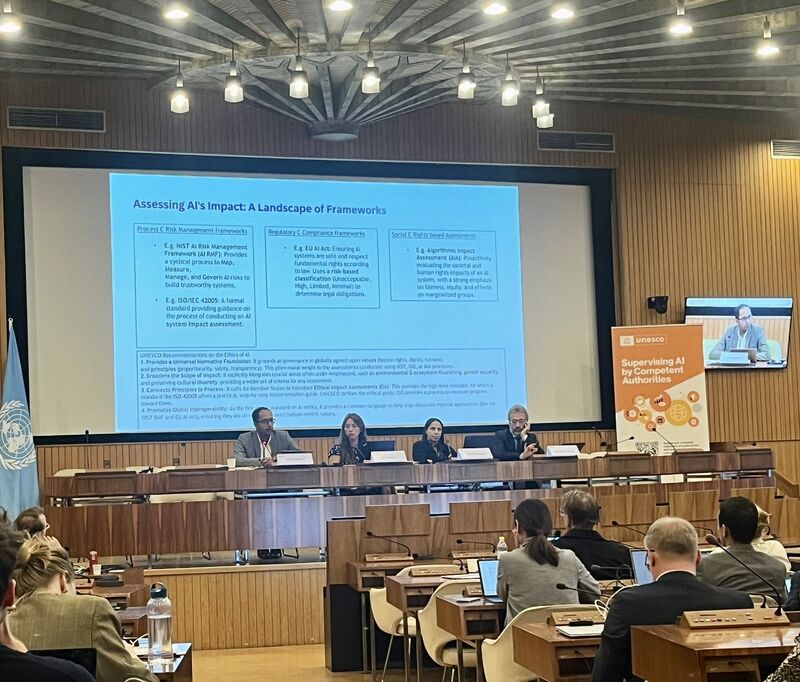

In the context of the Expert Roundtable on Capacity Building for AI Supervisory Authorities, we had a great panel discussion with Nele Roekens and Vikram Nagendra on AI impact assessment.

For the many supervisory authorities convened in Paris, impact assessment does more than align AI design with communities’ core interests by respecting ethical values and fundamental/human rights and minimising potential safety risks. It is also a powerful tool that enables these authorities to understand WHAT issues the AI system has raised or raises, HOW these issues have been addressed and WHEN the impact assessment must be revised.

From this perspective, impact assessment goes beyond mere reporting and becomes a tool for checking the design approach adopted, facilitating proactive engagement with AI operators (e.g. sandboxes), and increasing transparency and accountability throughout a step-by-step compliance journey.

The discussion raised many questions on this point: how to organise the procedure internally, who should be involved in it and what competencies are required. All of these highlight the need for a pragmatic approach to AI regulation, focused on best practices and guidelines that support both AI operators and supervisory authorities in creating guardrails for AI innovation and development.

Here, UNESCO’s ongoing work on AI is very useful. It is not limited to the Ethical Impact Assessment tool; it also includes interesting use cases.

I would like to thank Angelica A. Fernández for the invitation and Doaa Abu-Elyounes for moderating the panel and leading such an interesting discussion.

(Photo: Vikram Nagendra’s presentation)